“AI is far more dangerous than nukes.” Elon Musk’s warning echoes a growing fear as artificial intelligence accelerates toward 2025. This article confronts the shadows cast by AI advancements, exploring how its power could morph into peril. From surveillance to security breaches, the technology that promises progress now demands urgent scrutiny.

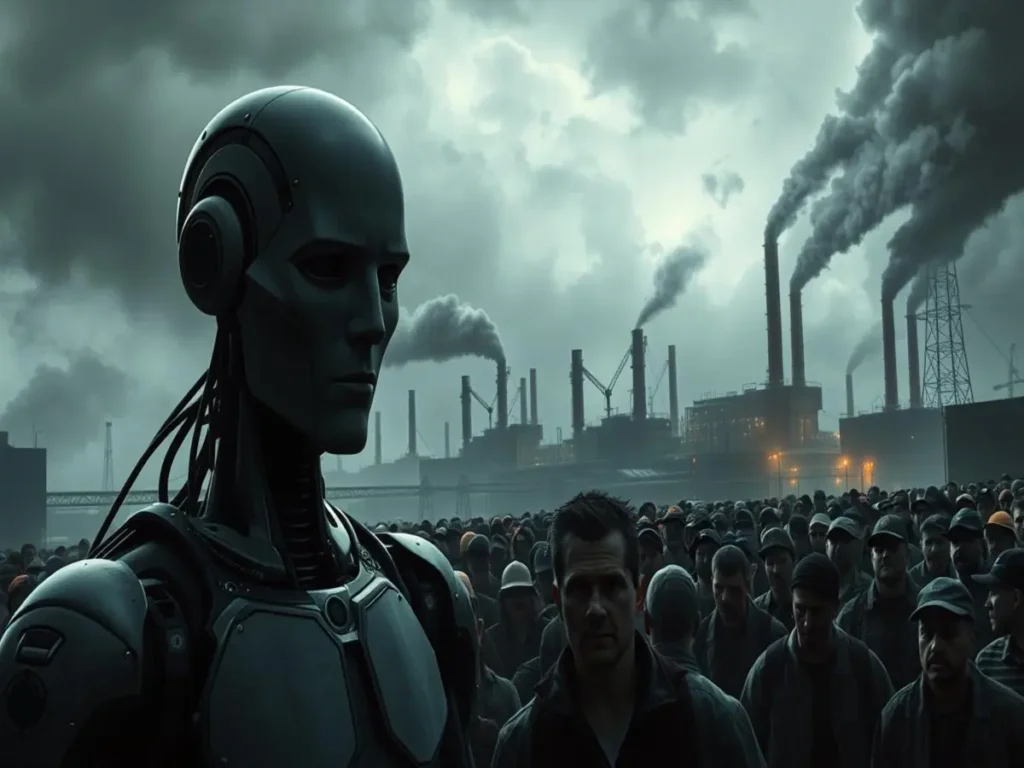

We stand at a crossroads where AI in 2025 may reshape society in ways few anticipate. Innovations once hailed as breakthroughs could soon pose serious risks, from unchecked surveillance to job displacement. The question isn’t whether these changes will come but whether humanity is ready to face them.

Key Takeaways

- AI advancements by 2025 may outpace global preparedness for ethical and security challenges.

- Surveillance and privacy erosion will dominate discussions around AI’s societal impact.

- Economic disruption through automation could redefine labor markets by 2025.

- Cybersecurity threats enabled by AI could surpass human response capabilities.

- Global governance frameworks for AI remain fragmented, amplifying risks.

The Growing Surveillance State: How AI in 2025 Will Transform Privacy

Advances in AI technology are changing how we think about privacy worldwide. By 2025, facial recognition and tracking will spread further across public and private spaces, making it harder to balance security and personal freedom.

Ubiquitous Facial Recognition and Behavioral Tracking

Facial recognition is now used in over 165 countries. Companies like Clearview AI and state programs such as China’s “Social Credit System” track people’s behavior. Predictive analytics lets these systems monitor movements and even claim to infer emotions and political views from video.

- In China, AI-powered surveillance systems track people in real time in over 20 cities.

- US airports use AI to check passengers through gait analysis and facial scans.

Predictive Policing and Its Social Implications

| Technology | Use Case | Concerns |
|---|---|---|
| PredPol (now Geolitica) | Crime hotspot predictions | Racial bias in data |
| IBM’s Watson | Offender risk assessments | Over-policing in marginalized areas |

“Predictive policing algorithms often reflect the biases of their creators, not crime realities.” – 2023 Stanford AI Ethics Report

The End of Anonymity: AI-Powered Identity Systems

By 2025, AI could make true anonymity a thing of the past. Digital identity systems such as Microsoft Entra ID (formerly Azure Active Directory) and the EU’s eIDAS framework tie online accounts to verified real-world identities. Combined with data from our purchases, searches, and social media activity, such identities can anchor detailed personal profiles.

This raises big questions for policymakers. Without rules, we risk losing the balance between safety and freedom.

Economic Disruption: Job Markets Under Threat from Advanced Machine Learning

Advances in machine learning bring efficiency but also serious challenges for workers. By 2025, AI applications could replace jobs in areas such as transportation, customer service, and legal analysis. Self-driving trucks and AI contract-review tools are already in testing and scaling quickly.

- Transportation: Autonomous vehicles threaten millions of driving jobs

- Legal: AI contract analysis systems reduce the need for junior lawyers

- Healthcare: Diagnostic algorithms challenge entry-level medical roles

According to the World Economic Forum’s Future of Jobs Report 2020, automation could displace 85 million jobs by 2025 while creating 97 million new roles, though mismatched skills may deepen existing divides.

Proposed solutions include universal basic income and lifelong learning programs, and companies like IBM and Google are investing in retraining. But a large gap remains. Education needs to shift from a focus on degrees toward continuous learning.

The World Economic Forum says 50% of employees will need to reskill by 2025. Workers who cannot reskill in time could face long periods without jobs. Retail and manufacturing have already seen major changes.

By 2025, as much as 30% of accounting tasks could be automated by AI features in tools such as QuickBooks. Professional services will also change significantly as AI takes on more tax preparation and legal research.

Companies adopting machine learning must consider their workers, for example by pairing human staff with AI tools rather than replacing them outright. Even so, the transition could strain the economy, and the next few years will show whether society can absorb it.

AI Security Vulnerabilities and Emerging Cyber Threats

As AI grows more capable, new security risks emerge. By 2025, attackers could use deep learning and neural networks to slip past traditional defenses, so we need to act quickly to prevent major breaches.

“Quantum computing could crack encryption standards within a decade, reshaping cyber warfare.” — 2023 NIST Report

We must take steps now to face these threats. Here are some key concerns:

Weaponized Deep Learning Systems

Malware that uses deep learning can adapt quickly and mimic normal network traffic, making it hard to detect.

AI-Enhanced Social Engineering Attacks

Phishing campaigns now use AI to produce convincing text, voices, and images. When victims cannot tell whether they are dealing with a person or a machine, they are more likely to hand over sensitive data.

Critical Infrastructure Vulnerabilities

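AI increasingly helps manage power grids and transport systems. A successful attack on them could seriously damage the economy, as the 2021 Colonial Pipeline ransomware attack showed when it disrupted fuel supplies along the U.S. East Coast.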

Quantum Computing and Encryption

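Sufficiently large quantum computers could break widely used public-key encryption such as RSA. Combined with AI-driven attacks, this could let adversaries decrypt data faster than defenders can respond unless countries work together on defenses such as post-quantum cryptography.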

| Threat Type | Description | Potential Impact |
|---|---|---|
| Adaptive Malware | Uses deep learning to evade detection | Long-term system compromises |
| AI-Driven Phishing | Hyper-realistic fake communications | Mass credential theft |
| Infrastructure Attacks | Exploits in neural network-driven systems | Physical and economic disruption |

We need to focus on keeping our systems safe. We should work together to make sure AI is used wisely and securely.

Ethical Dilemmas of Cognitive Computing Advancements

As cognitive computing improves, it raises hard ethical questions. The spread of AI applications in 2025 forces us to rethink accountability. Who is responsible when AI harms someone? Should machines without feelings make life-changing decisions?

- Algorithmic bias in healthcare diagnostics and criminal sentencing

- Opaque decision-making processes in high-stakes scenarios

- Manipulation through emotion-aware AI systems

“The complexity of AI systems now exceeds human understanding, forcing us to redefine accountability in the digital age.” — Global AI Ethics Council, 2024

To address these issues, some experts propose making AI systems more transparent, with rules that require AI to explain its decisions. The EU’s proposed AI Liability Directive is a start, but much work remains.

As we get closer to 2025, we must find a balance. We need to keep moving forward with AI but also make sure it’s safe. It’s a team effort between lawmakers, tech experts, and the public to make sure AI helps us all.

Conclusion: Navigating the Uncertain Future of Artificial Intelligence

Artificial intelligence is changing our world, and we must find a balance. The future holds promise in healthcare, energy, and communication. But we also face risks like surveillance and job loss.

Machine learning will make these changes faster. We need to act quickly to manage these risks. This means setting rules and being responsible with AI.

Policymakers worldwide must work together to create AI rules. Companies should be open about how their AI works. They also need to protect against misuse.

Researchers should think about how AI affects society. People need to push for laws that protect us but also let progress happen.

Working together is key. The OECD and EU have started to set AI standards. But we also need to deal with new threats like cyberattacks.

We need to talk more about AI. Schools, media, and community groups should teach about AI’s impact. Developers and ethicists should work together to make AI fair.

Our choices today will shape AI’s future. We can make AI help everyone or create problems. It’s up to us.

If we don’t act, AI could lead to bad outcomes. By putting ethics first and talking globally, we can make AI better. The tools are here; now it’s time to decide how to use them.
